Building RAG-Based Applications for Production

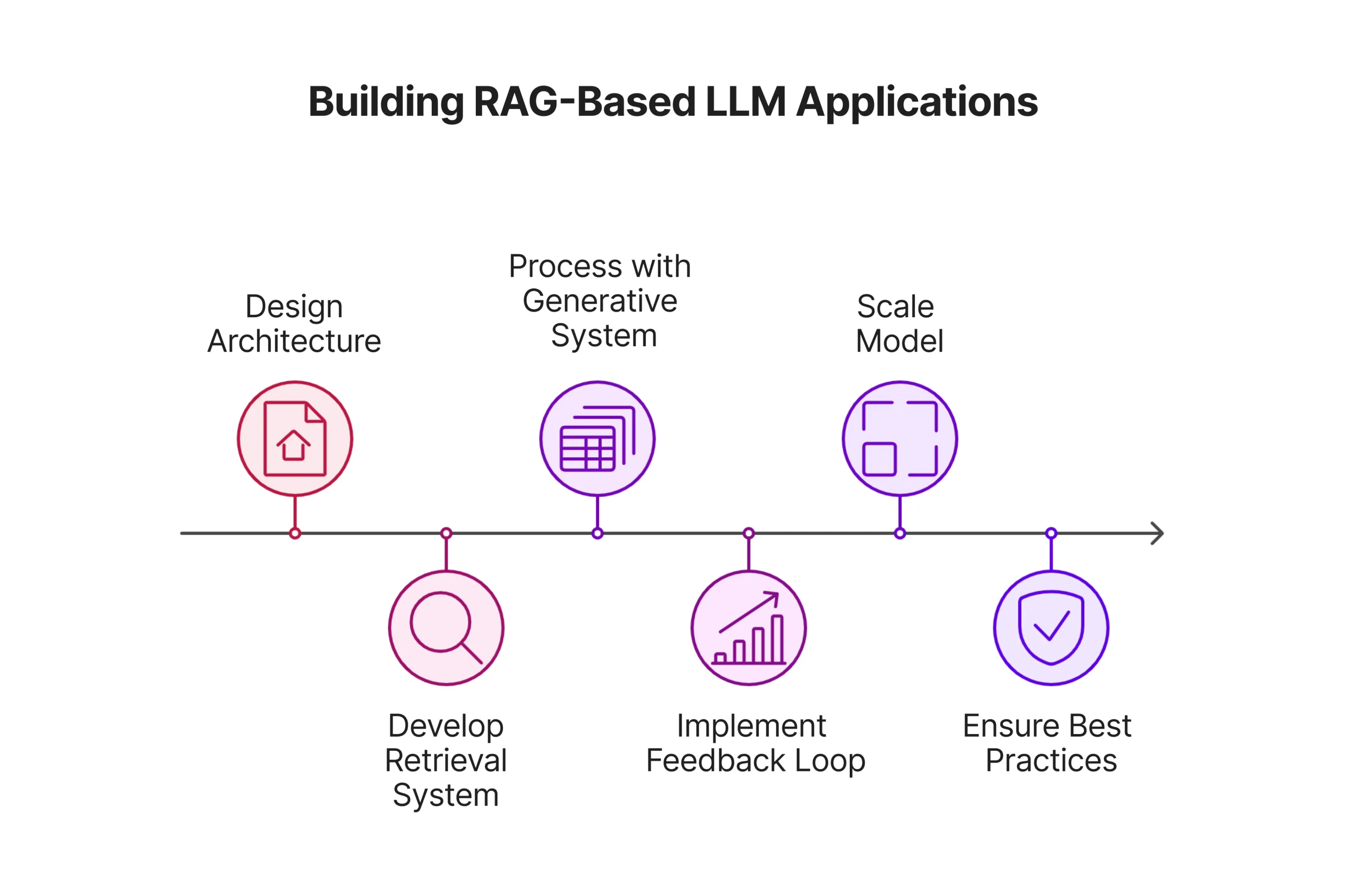

It can be observed that the RAG-based application can be fine-tuned for a broad spectrum of tasks pursuant to specific needs. In order to construct RAG-based Language Model (LLM) applications for production from scratch, tedious precautionary steps are required. First and foremost, the architecture should be cautiously designed. This is done by breaking down the system into distinct parts that in the end are able to interact coherently. Such components are the retrieval layer, generative layer, feedback loop, and the external knowledge base. Each of these key components play individualistic roles that seamlessly integrate as one. Thus, it is crucial to explore each layer in order to understand its function and purpose. This shifts into the second phase of building RAG-based LLM applications for production, which is the development workflow. Upon receiving input from a user, the retrieval system will first gather relevant information from external sources such as databases or search engines, to which the information is then transferred to the generative system such as GPT that processes the knowledge into a comprehensible response as an output. The feedback loop plays the role of continuously improving the system’s accuracy based on the user’s interaction behavior and feedback, whereas the external knowledge base serves the purpose being the information repository for the retrieval step to take place. Proceeding to the final step in building RAG-based LLM applications for production, developers ought to scale the model according to their needs. A few of the best practices for constructing efficient RAG applications is to have proper indexing using vector search or FAISS as well as data vectorization techniques. This allows the applications to be scaled to manage large databases as well as high user traffic. It should be taken note that it is also best practice to have consistent cycles of monitoring and feedback in order to scrutinize for performance bottlenecks. Another crucial practice to adhere to is ensuring data security and privacy measures are in place as well as compliance to the General Data Protection Regulation (GDPR) and other pre-existing regulations.

How to Get Started with RAG Application

Upon having precautionary notes in place, the steps to building a RAG application require attention-to-detail, especially if it is the first attempt. Although there are tutorials that act as a guidance in building RAG-based applications, developers still ought to first determine the goal of the RAG-based application which ranges from content generation to personalized assistants. Shifting into the development process, the retrieval and generative approach should be chosen with care. The approaches could be traditional such as TF-IDF for the retrieval component and a GPT-3/4 for the generative component. These approaches are then to be constructed into the retrieval and generative components respectively to which each model requires commitment into ensuring they operate in an efficient and optimized manner. For instance, preprocessing of documents should be conducted for the retrieval model by means of cleaning documents of irrelevant information for retrieval; whereas for the generative component, developers are recommended to fine-tune the model on domain-specific data to better advance how it generates responses with niche parameters of content. Last but not least, the components are to be pipelined with one another for a seamless integration. It goes without saying that developers should take an extra effort to monitor the retrieval-augmented generation process of the application to ensure its performance is up to the standards expected. This can be done by utilizing available tools such as Kubernetes to orchestrate the deployment of the RAG-based application within a cloud environment, or even Streamlit to prototype the application before the implementation process.

Advanced Concepts: Evaluating and Improving RAG Applications

Upon building a functioning RAG-based application, it is essential to conduct assessments as to its performance and efficiency. The operation of a RAG-based application can be measured by several evaluation metrics such as retrieval performance. This method focuses on the recall and precision of the retrieval model within the RAG-based application for the purpose of evaluating the system’s response efficiency. It assesses the time taken for the application to retrieve relevant information as well as the accuracy and diversity of content retrieved. Such considerations are taken into account due to the system’s supposed role in retrieving precise and verified data from overwhelming databases in order to meet the users’ query needs. Aside from assessing the retrieval model, the generation model can be evaluated as well by looking into the generative accuracy and perplexity. For RAG-based LLM applications, the Recall-Oriented Understudy for Gisting Evaluation (ROUGE) exists as a helpful tool in assisting the comparisons of n-grams overlaps between the referred texts and the generated output. Another example of an evaluation metric would be the human evaluation. This assessment process is conducted by means of a person evaluating how natural and coherent the responses generated by the application. This metric measures the fluency and informativeness of the RAG-based application so that it is able to provide a well-rounded user experience as a whole. Measuring the efficiency of the built RAG-based application is essential to understand its abilities as well as its limitations to make room for consistent improvement within the system.

Improving the RAG-based application can be conducted by optimizing the retrieval component as well as the generative component. The former is commonly improved by using better fine-tuned embedding models such as BERT for more precise linguistic similarities, or even by expanding the data coverage and diversity by integrating a wider parameter of external knowledge. On the other hand, the latter model can be improved by reducing the possibilities of hallucinations by having fact-checking mechanisms in place to ensure the generated responses are grounded by pre-existing knowledge from the retrieved data. Furthermore, developers have the liberty to better advance the collaboration between the retrieval and generative model by refining the retrieval-augmented generation process together to have a more seamless interaction. Feedback loops can also be assembled by allowing the generative component to influence the retrieval component in guiding it to more reliable sources of data.

Transformative Applications Across Industries

In light of the vast benefits that come along with RAG-based applications, global sectors have already begun to implement the system within their fields to optimize its competitive advantages. For instance, there has been an uprising trend within the healthcare industry in utilizing RAG-based applications to assist with patient care. It allows healthcare providers to have real-time retrieval of medical information albeit general or niche to which they are able to use such informative data to make proper decisions concerning diagnostics and treatments. Education fields are also adapting with RAG-based applications into learning tools to have an enhanced learning experience. The applications are now refined into tutoring systems for real-time assistance with queries by students and teachers as well as dynamic content generation for research-based summaries or explanations. The efficiency in the retrieval component of the RAG-based application allows users to save the time needed for researching primary and secondary sources of information. Moreover, the RAG application’s architecture also benefits the finance industry in assessing risks and detecting potential frauds on a day-to-day basis. This acts as a safety net for users within the finance industry to have a real-time research system in assisting them with making informed and weighty decisions. Based on how the RAG-based applications’ mechanisms are optimized by industries daily, it can be observed that the architecture operates in a flexible manner to be adaptable in many fields. The retail industry for instance is also able to utilize the system to assist with inventory insights for better management as well as relying on the technology to make personalized industrial recommendations when needed.