How building an open-source MLOps platform on AWS can improve your company’s efficiency

Jul 03, 2024

The benefits of using MLOps software in data science have been discussed and recognized for years, regardless of the type of AI/ML the engineers practice. It has gradually become a standard that relieves data scientists of the burden of manually tracking their experiments, manually versioning their assets (datasets, models), maintaining the codebase for serving the models, and so on.

Usually, after recognizing the theoretical advantages of MLOps, an inexperienced data scientist who wants to use it in everyday work turns to small open-source tools that have wide community support and can run in a local environment or inside a notebook. In general, these are used mainly for personal purposes.

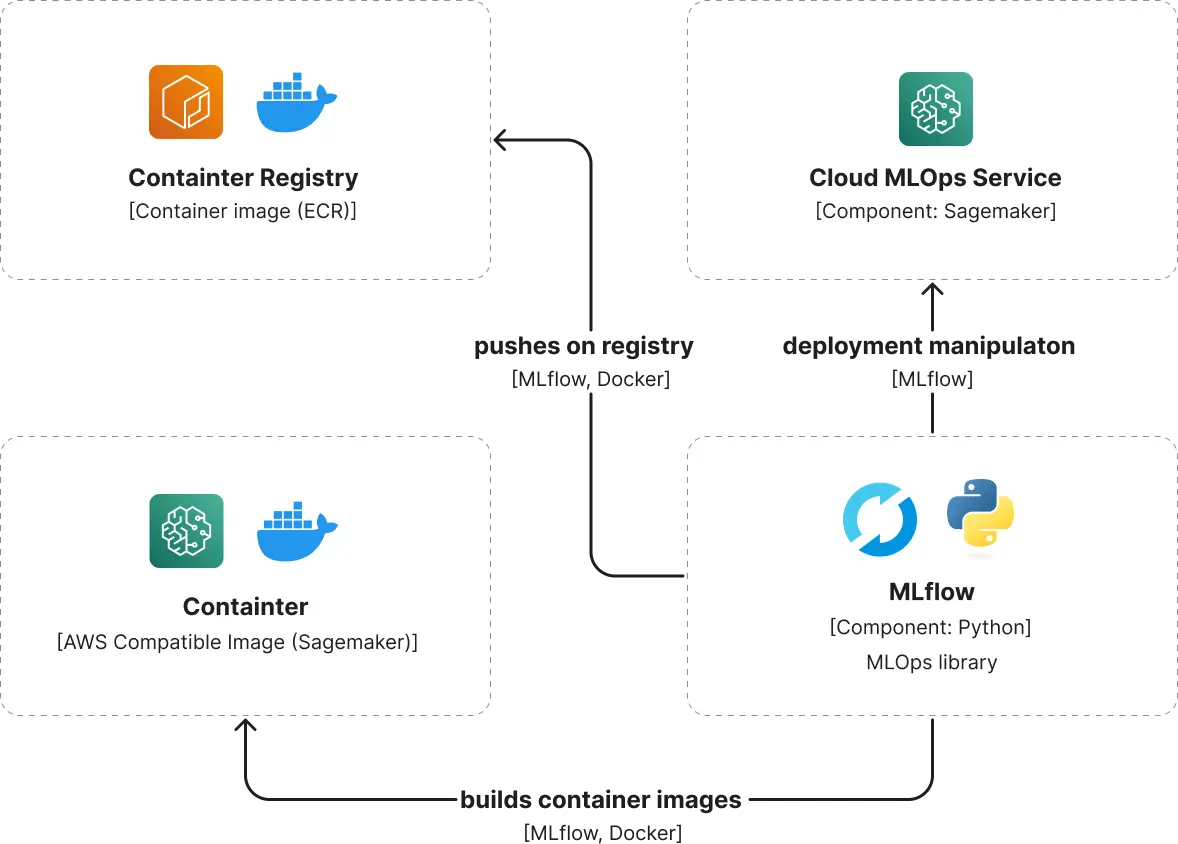

One such MLOps tool that has been extremely popular for the last 5-6 years is MLflow. It goes beyond solving the previously mentioned pain points of data scientists’ work by also providing model evaluation, model packaging, and model deployment. For example, one popular way of making almost any model compatible with another MLOps service from AWS, SageMaker, which the data science teams at Intertec have used, is to build a SageMaker-compatible image, optionally push it to ECR (Elastic Container Registry), and then deploy it on SageMaker by creating an endpoint.

What is MLOps?

MLOps stands for Machine Learning Operations. It's like the behind-the-scenes conductor for machine learning models. Just like how a symphony needs a conductor to ensure all instruments play harmoniously, MLOps ensures that machine learning models are developed, deployed, and managed smoothly. It covers everything from data preparation and model training to deployment and monitoring, so that the models can perform at their best.

The benefits of MLOps and machine learning development services for your business

Discover how MLOps can transform your business operations:

- Efficiency: MLOps helps data teams build and deploy machine learning models faster, ensuring they're high-quality and ready for use sooner.

- Scalability: With MLOps, managing and scaling thousands of models becomes manageable. It keeps everything running smoothly from development to deployment, supporting continuous integration and delivery.

- Collaboration: MLOps fosters better teamwork between data scientists and IT teams. By ensuring ML pipelines are reproducible, it minimizes conflicts and speeds up the release of new features.

- Risk management: Machine learning models often face regulatory scrutiny. MLOps provides transparency and tools to monitor models for issues like data drift, helping companies stay compliant and responsive.

- Operational stability: By integrating MLOps practices, organizations can maintain model performance over time, ensuring that models continue to deliver accurate results and value.

Building an OSS MLOps platform through machine learning development services as an AWS partner

To create a robust and centralized OSS MLOps platform on AWS, we partnered with an AWS-certified partner. Their expertise in both AWS infrastructure and MLOps tools helped us streamline the process and avoid common pitfalls. By collaborating with them, we ensured that our setup was optimized for scalability, security, and performance, benefiting from their extensive experience in deploying similar solutions.

Streamlining data science: How MLOps tools like MLflow are transforming work for data scientists

Using MLOps software in data science has become a recognized standard, freeing data scientists from manual tracking, versioning, and code maintenance. Newcomers often start with open-source tools for personal use, like MLflow, which enhances model evaluation, packaging, and deployment. For instance, teams at Intertec leverage MLflow to streamline compatibility with AWS SageMaker, simplifying model deployment. Explore more in Intertec's guide to the machine learning lifecycle.

Example of converting a trained Xception CNN model for clothes classification

The example below takes a trained Xception CNN model for clothes classification, whose artifacts are stored in an S3 location, turns it into a SageMaker-compatible model, and deploys it with just a few lines:

# mlflow models build-docker --model-uri {S3_URI} --name "mlflow-sagemaker-xception:latest"

# mlflow sagemaker build-and-push-container --build --push -c mlflow-sagemaker-xception

mlflow deployments create --target sagemaker --name mlflow-sagemaker-xception --model-uri {S3_URI} -C region_name={AWS_REGION} -C image_url={ECR_URI} -C execution_role_arn={SAGEMAKER_ARN}
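The same deployment can also be driven from Python through MLflow's deployments client. What follows is a minimal sketch, not the exact code used at Intertec: the helper names build_sagemaker_config and deploy_to_sagemaker are hypothetical, and the config keys simply mirror the -C options of the command above. MLflow is imported lazily, so the configuration helper can be checked even where MLflow is not installed.

```python
from typing import Dict


def build_sagemaker_config(
    region_name: str, image_url: str, execution_role_arn: str
) -> Dict[str, str]:
    """Collect the settings the CLI receives through its -C options."""
    config = {
        "region_name": region_name,
        "image_url": image_url,
        "execution_role_arn": execution_role_arn,
    }
    # Fail early on empty values instead of letting the deployment fail later.
    missing = [key for key, value in config.items() if not value]
    if missing:
        raise ValueError(f"Missing SageMaker settings: {', '.join(missing)}")
    return config


def deploy_to_sagemaker(name: str, model_uri: str, config: Dict[str, str]):
    """Create a SageMaker endpoint for the model stored at model_uri."""
    # Imported here so this module loads even without MLflow installed.
    from mlflow.deployments import get_deploy_client

    client = get_deploy_client("sagemaker")
    return client.create_deployment(name=name, model_uri=model_uri, config=config)
```

Calling deploy_to_sagemaker with the name mlflow-sagemaker-xception, the S3 model URI, and the config built from the region, ECR image URL, and execution role ARN would then correspond to the last command above.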

If you are curious about more examples of MLflow usage on AWS, and specifically with SageMaker, I encourage you to check out the 2nd edition of Julien Simon’s book “Learn Amazon SageMaker”.

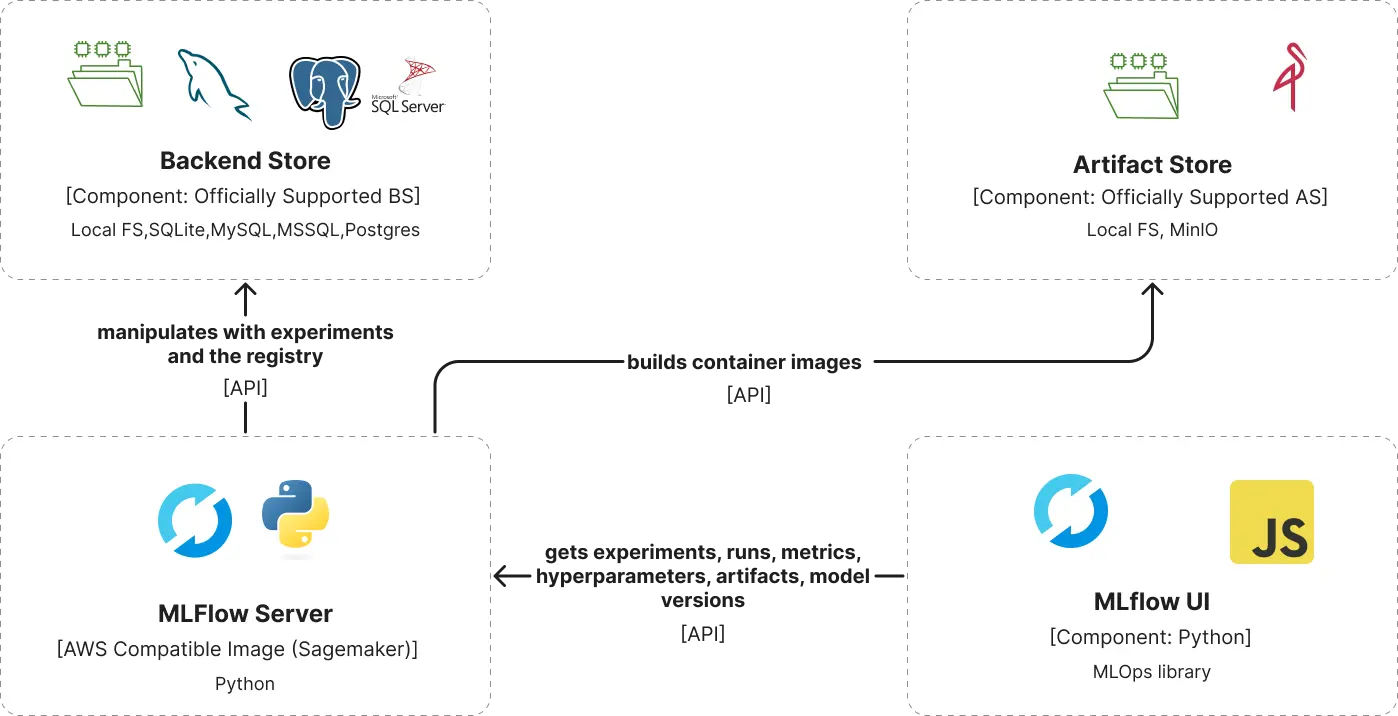

MLflow’s compatibility extends to more services within the AWS ecosystem. Since MLflow is not just an ordinary library but a server with a UI that connects to a storage system for its artifact store and a database for its backend store, there are multiple options to choose from. Yet data scientists usually start by running the server locally without customizing the setup, using the local or the notebook’s file system for both the artifact store and the backend store. In some cases they configure MLflow to use a local database (SQLite, MySQL, MSSQL, PostgreSQL) as the backend store or MinIO as the artifact store.

This setup works perfectly for them on a personal basis, but problems arise when they want to expose their progress or work within a team.
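From the client's point of view, the difference between the personal and the shared setup is mostly the tracking URI. The sketch below illustrates this under a few assumptions: the helper names, the fallback directory ./mlruns, and the logged parameter and metric names are all hypothetical, while MLFLOW_TRACKING_URI is the environment variable MLflow itself reads. MLflow is imported lazily inside the logging function.

```python
import os
from typing import Mapping, Optional

# Hypothetical local directory used when no shared server is configured.
LOCAL_STORE = "./mlruns"


def resolve_tracking_uri(env: Optional[Mapping[str, str]] = None) -> str:
    """Prefer a shared tracking server if one is configured, else stay local."""
    env = os.environ if env is None else env
    return env.get("MLFLOW_TRACKING_URI") or LOCAL_STORE


def log_example_run(tracking_uri: str, experiment: str, accuracy: float) -> None:
    """Log one run; only the tracking URI decides where it ends up."""
    # Imported here so this module loads even without MLflow installed.
    import mlflow

    mlflow.set_tracking_uri(tracking_uri)
    mlflow.set_experiment(experiment)
    with mlflow.start_run():
        mlflow.log_param("architecture", "xception")
        mlflow.log_metric("val_accuracy", accuracy)
```

With this shape, the training code stays identical for a notebook experiment and for a team-wide server; only the environment changes.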

The challenge of dispersed tool usage: Simplifying your strategy

In a team or company providing AI/ML services, like Intertec, having every data scientist manage their own MLOps tools for an extended period isn’t sustainable. This leads to assets being non-reusable, experiments needing manual sharing, and permissions being set repeatedly across platforms. Even within the same team, without a centralized MLOps setup, efforts are scattered, making it difficult for team members to seamlessly pick up where others left off.

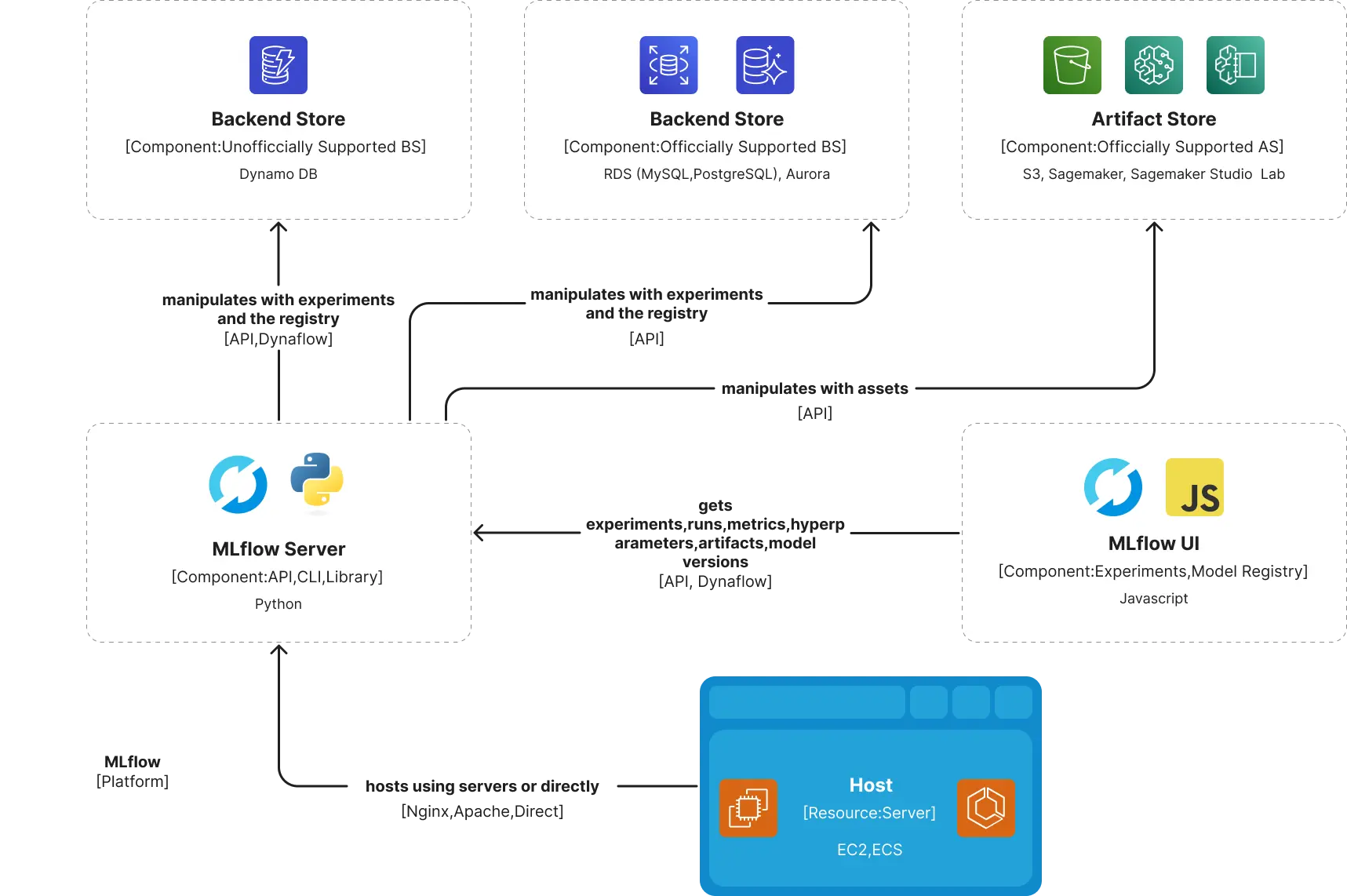

At Intertec, most data scientists were already proficient with MLflow. Switching to a new MLOps software would have required a significant adjustment period. Therefore, we opted to maintain MLflow as our preferred platform. Given that AWS is our primary cloud provider, we leverage its diverse infrastructure services to build our centralized MLflow platform. Even AWS services that MLflow does not officially support can be made to work, for example by implementing the backend store on the DynamoDB NoSQL database.

Some of Intertec’s partners and clients, whose infrastructure relies on AWS, recognized the necessity and benefits of a centralized MLOps platform after witnessing our success. They adapted our approach to fit their specific hosting requirements for MLflow. Intertec has achieved successful implementation both in-house and for clients, demonstrating the scalability and effectiveness of our approach.

Setting up MLflow on AWS: Simplifying the cloud operations

With an experienced DevOps team familiar with AWS and MLflow’s capabilities, setting up MLflow on AWS proceeded smoothly. During maintenance, minor challenges arose, such as upgrading the backend store schema after MLflow updates. AWS provides a range of resources compatible with MLflow’s requirements that help overcome those challenges. Here are our recommended configurations:

- Opt for smaller EC2 instances for running the MLflow server. MLflow’s lightweight nature doesn’t demand high-capacity instances.

- Choose RDS (MySQL or PostgreSQL) as the backend store. Even with extensive experiment logging, the data volume rarely justifies the complexities of NoSQL databases, making SQL options more suitable.

- Use S3 as the artifact store. Even if you prefer not to load artifacts directly through MLflow, accessing them from S3 remains efficient.

For Intertec’s in-house projects and research, this setup—featuring RDS (PostgreSQL) for the backend store, S3 for artifacts, and a small EC2 instance—proved to be straightforward and effective.
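To make the recommended layout concrete, here is a minimal sketch of how the server command for an RDS (PostgreSQL) backend store and an S3 artifact store could be assembled. The function names, host, bucket, and database names are hypothetical, and it assumes a PostgreSQL driver such as psycopg2 is installed next to MLflow. Credentials are percent-encoded so that special characters in a password do not break the URI.

```python
from typing import List
from urllib.parse import quote_plus


def backend_store_uri(
    user: str, password: str, host: str, database: str, port: int = 5432
) -> str:
    """Build a SQLAlchemy-style PostgreSQL URI for the MLflow backend store."""
    # Encode credentials so characters such as "@" or "/" stay unambiguous.
    return (
        f"postgresql://{quote_plus(user)}:{quote_plus(password)}"
        f"@{host}:{port}/{database}"
    )


def mlflow_server_command(
    backend_uri: str,
    bucket: str,
    prefix: str = "mlflow",
    host: str = "0.0.0.0",
    port: int = 5000,
) -> List[str]:
    """Return the argument list for starting the MLflow tracking server."""
    return [
        "mlflow", "server",
        "--backend-store-uri", backend_uri,
        "--default-artifact-root", f"s3://{bucket}/{prefix}",
        "--host", host,
        "--port", str(port),
    ]
```

The returned list can be handed to subprocess.run on the EC2 instance, or joined into the start command of a service unit.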

Intertec also prepared an alternative MLflow setup on Kubernetes, aimed at cloud-agnostic deployments. Deployed on EKS with MinIO for artifacts and SQLite for the backend store, this setup incorporates additional ML tools like Label Studio and JupyterHub, creating a comprehensive MLOps platform.

For one of our clients, integrating MLflow into their dedicated ECS cluster for AI/ML services proved essential. The MLflow server was specifically designated for their production AWS account, leveraging MLflow's internal environment management capabilities. To facilitate communication between services across different AWS accounts and the MLflow server, cross-account resource access was enabled using IAM services.
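The article does not say which IAM mechanism was used, so the following is only one possible sketch: it assumes the production account exposes an IAM role that services in other accounts are allowed to assume through STS. The role ARN, session name, and helper names are hypothetical. boto3 is imported lazily, so the credential mapping can be checked without AWS access.

```python
from typing import Dict, Mapping


def credentials_to_env(credentials: Mapping[str, str]) -> Dict[str, str]:
    """Map the Credentials block of an STS response to standard AWS env vars."""
    return {
        "AWS_ACCESS_KEY_ID": credentials["AccessKeyId"],
        "AWS_SECRET_ACCESS_KEY": credentials["SecretAccessKey"],
        "AWS_SESSION_TOKEN": credentials["SessionToken"],
    }


def assume_cross_account_role(
    role_arn: str, session_name: str = "mlflow-client"
) -> Dict[str, str]:
    """Request temporary credentials for a role in another AWS account."""
    # Imported here so this module loads even without boto3 installed.
    import boto3

    sts = boto3.client("sts")
    response = sts.assume_role(RoleArn=role_arn, RoleSessionName=session_name)
    return credentials_to_env(response["Credentials"])
```

A client in another account could update os.environ with the returned mapping before logging artifacts, so that the S3 calls made on its behalf use the temporary credentials.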

Access to the MLflow UI is facilitated through an Application Load Balancer (ALB), utilizing dynamic host port mapping for ECS tasks. Within the task definition, networking is configured in bridge mode to ensure seamless integration.
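In ECS terms, dynamic host port mapping means the task runs in bridge network mode with the host port set to 0, so that ECS picks a free port on the instance and the ALB target group routes to it. A minimal sketch of such a task definition, expressed as the dictionary boto3 would accept, is shown below. The family name, container name, memory value, and container port are assumptions for illustration, not the client's actual settings.

```python
from typing import Any, Dict


def mlflow_task_definition(image: str, container_port: int = 5000) -> Dict[str, Any]:
    """Describe an ECS task that exposes MLflow through a dynamic host port."""
    return {
        "family": "mlflow-server",  # hypothetical task family name
        "networkMode": "bridge",
        "containerDefinitions": [
            {
                "name": "mlflow",  # hypothetical container name
                "image": image,
                "essential": True,
                "memory": 1024,  # assumed value; size it to your workload
                "portMappings": [
                    {
                        "containerPort": container_port,
                        # 0 asks ECS to assign a free host port at launch.
                        "hostPort": 0,
                        "protocol": "tcp",
                    }
                ],
            }
        ],
    }
```

The dictionary can be passed as keyword arguments to the ECS client's register_task_definition call, with the service then attached to the ALB target group.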

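The other parts of the MLflow setup rely on S3 for the artifact store and RDS (MySQL) for the backend store. Maintenance of the backend store is automated within the MLflow Dockerfile itself: the container runs mlflow db upgrade against the database before starting the server. The $-prefixed names are placeholders for values supplied at build or run time.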

ARG PYTHON_BASE_IMAGE

FROM python:${PYTHON_BASE_IMAGE}-slim

ARG MLFLOW_VERSION

ARG PYMYSQL_VERSION

ARG BOTO3_VERSION

ARG MLFLOW_INTERNAL_PORT

RUN pip install mlflow==$MLFLOW_VERSION pymysql==$PYMYSQL_VERSION boto3==$BOTO3_VERSION

EXPOSE $MLFLOW_INTERNAL_PORT

CMD mlflow db upgrade mysql+pymysql://$_MLFLOW_RDS_MYSQL_USER_:$_MLFLOW_RDS_MYSQL_PASS_@$_MLFLOW_RDS_MYSQL_HOST_:3306/backendstore && mlflow server --default-artifact-root s3://$_MLFLOW_S3_BUCKET_/mlflow --backend-store-uri mysql+pymysql://$_MLFLOW_RDS_MYSQL_USER_:$_MLFLOW_RDS_MYSQL_PASS_@$_MLFLOW_RDS_MYSQL_HOST_:3306/backendstore --host $MLFLOW_INTERNAL_HOST

At Intertec, we have leveraged MLflow on AWS for over 5 years, supporting our clients for nearly 4 years. During this time, we've encountered minimal issues and found that maintaining MLflow on AWS is remarkably efficient. This setup has greatly streamlined our operations, consistently accelerating time-to-market for numerous projects.

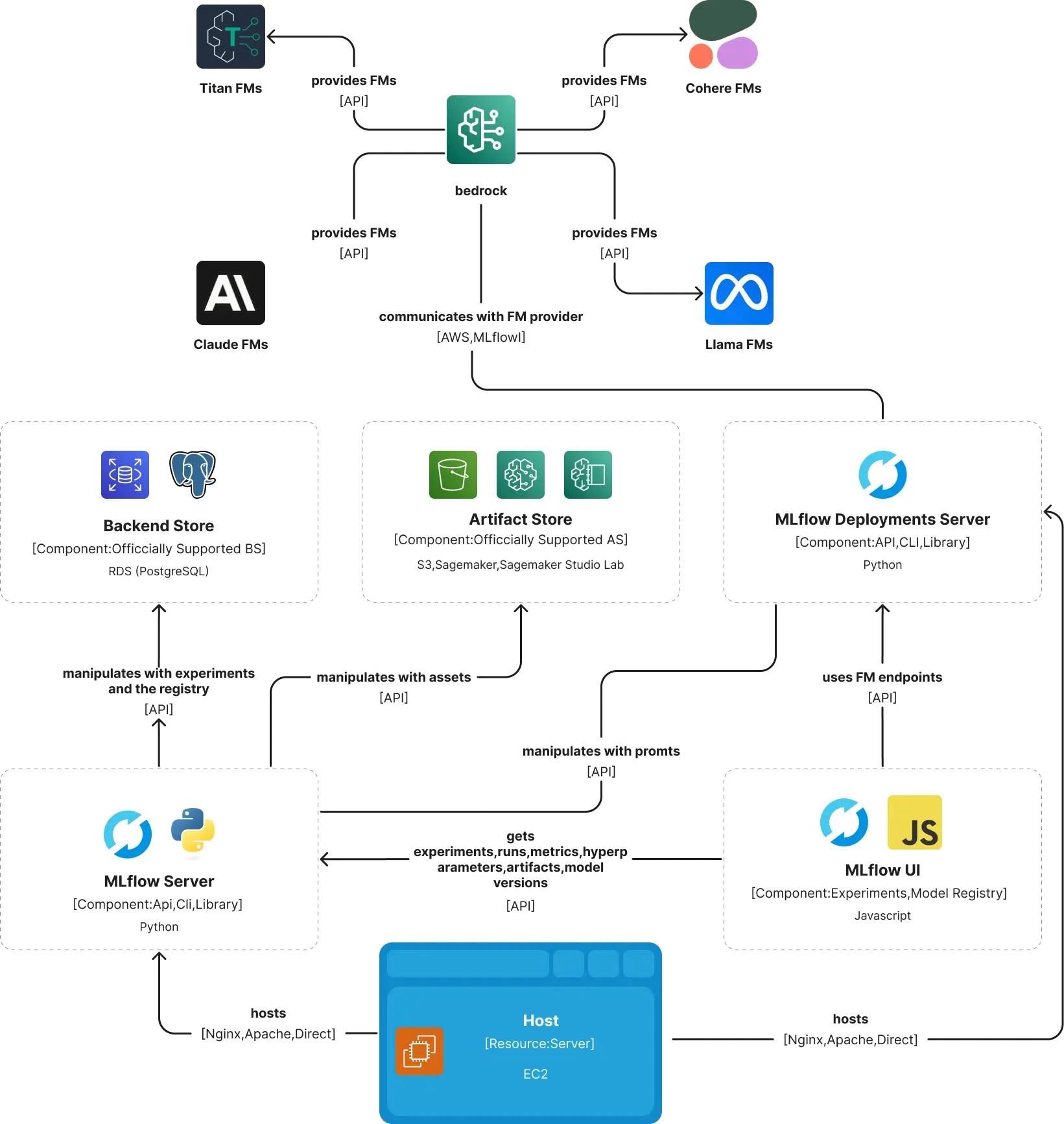

As we embrace GenAI and MLOps technology, our engineers and cloud providers are adapting to these advancements. MLOps platforms are evolving with GenAI capabilities, our engineers are enhancing their prompt engineering skills, and cloud providers are rolling out new services to support emerging foundation models.

MLflow initially enabled us to log prompts like traditional ML models. It later introduced the Deployments Server to manage large GenAI models effectively, integrating with RAG frameworks/providers. Concurrently, AWS launched Bedrock, a service designed for foundation models, offering support for fine-tuning and RAG integration. Bedrock's synergy with MLflow makes it ideal for ongoing maintenance.

For instance, here's a Dockerfile snippet for deploying the Deployments Server on EC2, complementing our existing MLflow setup. The server also expects an endpoint configuration file, passed with the --config-path option or through the MLFLOW_DEPLOYMENTS_CONFIG environment variable, which the snippet does not show:

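ARG PYTHON_BASE_IMAGE

FROM python:${PYTHON_BASE_IMAGE}-slim

ARG MLFLOW_VERSION

ARG MLFLOW_INTERNAL_PORT

ENV MLFLOW_INTERNAL_PORT=$MLFLOW_INTERNAL_PORT

RUN pip install "mlflow[genai]==$MLFLOW_VERSION"

EXPOSE $MLFLOW_INTERNAL_PORT

CMD mlflow deployments start-server --port $MLFLOW_INTERNAL_PORT --host $MLFLOW_INTERNAL_HOST --workers $NUMBER_OF_WORKERS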
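Once the Deployments Server is running, applications can query its endpoints through MLflow's deployments client. The sketch below assumes a chat-type endpoint has been configured on the server; the endpoint name passed in, the server URL, and the helper names are hypothetical. MLflow is imported lazily, so the payload helper can be checked on its own.

```python
from typing import Any, Dict


def chat_payload(user_message: str) -> Dict[str, Any]:
    """Build the request body for a chat-type endpoint."""
    if not user_message.strip():
        raise ValueError("user_message must not be empty")
    return {"messages": [{"role": "user", "content": user_message}]}


def ask(server_url: str, endpoint: str, user_message: str):
    """Send one chat message to an endpoint of the Deployments Server."""
    # Imported here so this module loads even without MLflow installed.
    from mlflow.deployments import get_deploy_client

    client = get_deploy_client(server_url)
    return client.predict(endpoint=endpoint, inputs=chat_payload(user_message))
```

Because the client only sees the server URL and an endpoint name, the model provider behind the endpoint can be changed in the server configuration without touching application code.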

Final thoughts

As we navigate the evolution of MLOps on AWS, our journey with MLflow has been pivotal at Intertec. Over the years, we've seen firsthand how integrating robust MLOps tools enhances efficiency and collaboration for data scientists. By leveraging AWS's scalable infrastructure and MLflow's versatile capabilities, we've streamlined operations and accelerated project timelines.

Looking forward, as MLflow introduces new GenAI features and AWS expands its support with services like Bedrock, we're poised to harness these advancements. Our focus remains on developing innovative AI applications and adapting to emerging technologies, ensuring that our clients benefit from cutting-edge solutions.

Join us in embracing the future of MLOps, where technology meets possibility, and together, let's redefine what's achievable in AI and machine learning.

Velimir Graorkoski
